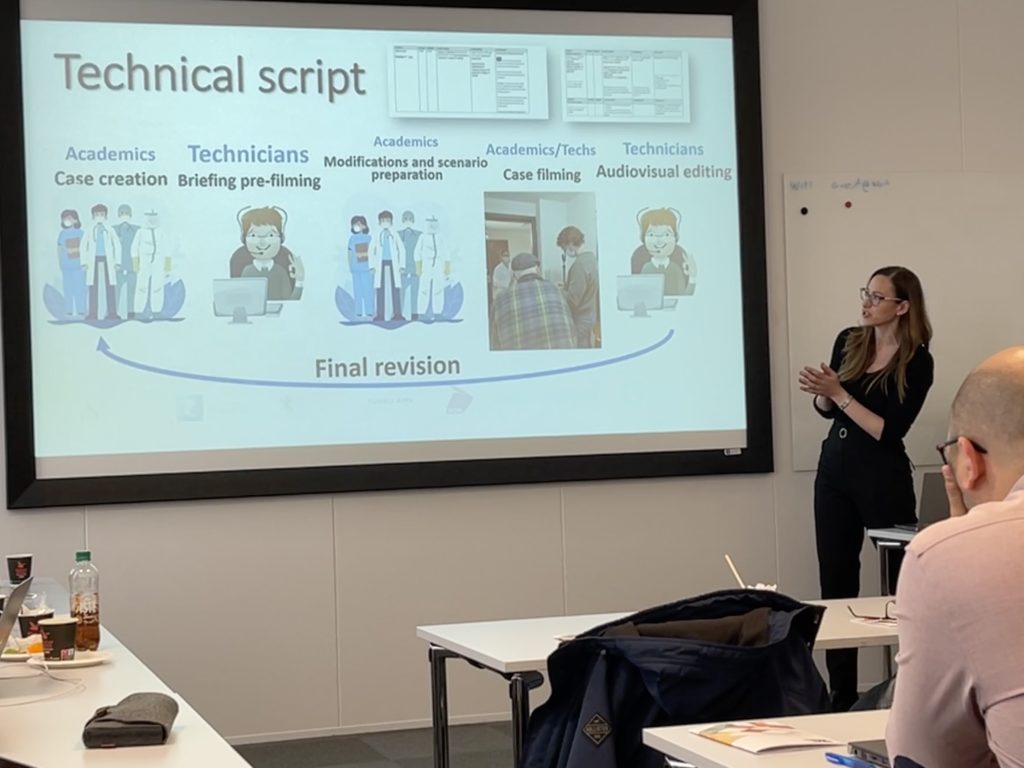

This is particularly relevant as the creation of reusable learning objects (RLOs) and immersive simulation scenarios is often undertaken by teachers themselves.

According to official data from Google, about 70% of searches on YouTube include the terms “How to do”, which indicates a great demand for audiovisual resources that people use to learn how to do things, that is, to learn practical skills rather than theory (Google, 2015).

Throughout the 360ViSi project, it is important to emphasise that everything considered is based on a clear and sound pedagogical point of view.

1. Pedagogical perspectives on 360° interactive video and related immersive XR-technologies

According to stakeholders like BlendMedia, interactive 360° video technology is beginning to take on an importance comparable to the revolution brought about by the arrival of video in education, with the difference that this technology also offers students immersion and a sense of “presence” that enhance empathy and deepen understanding of the content.

According to various authors, including the well-known Benjamin Bloom (the American educational psychologist renowned for the “taxonomy of educational objectives”), learning becomes much more meaningful and long-lasting when the student interprets, focuses on, creates, interacts with, and evaluates real situations.

Grossman et al. (2009) distinguished between three types of pedagogical practice that teachers use to bring students closer to a practical reality. The first is representation, through which the student is provided with resources for analysis such as videos, interviews, etc. The second is decomposition, in which the teacher dissects and analyzes learning through discussion forums, debates, etc. The third is approximation, which allows students to approach their future professional reality without necessarily being in it (Ferdig and Kosko, 2020).

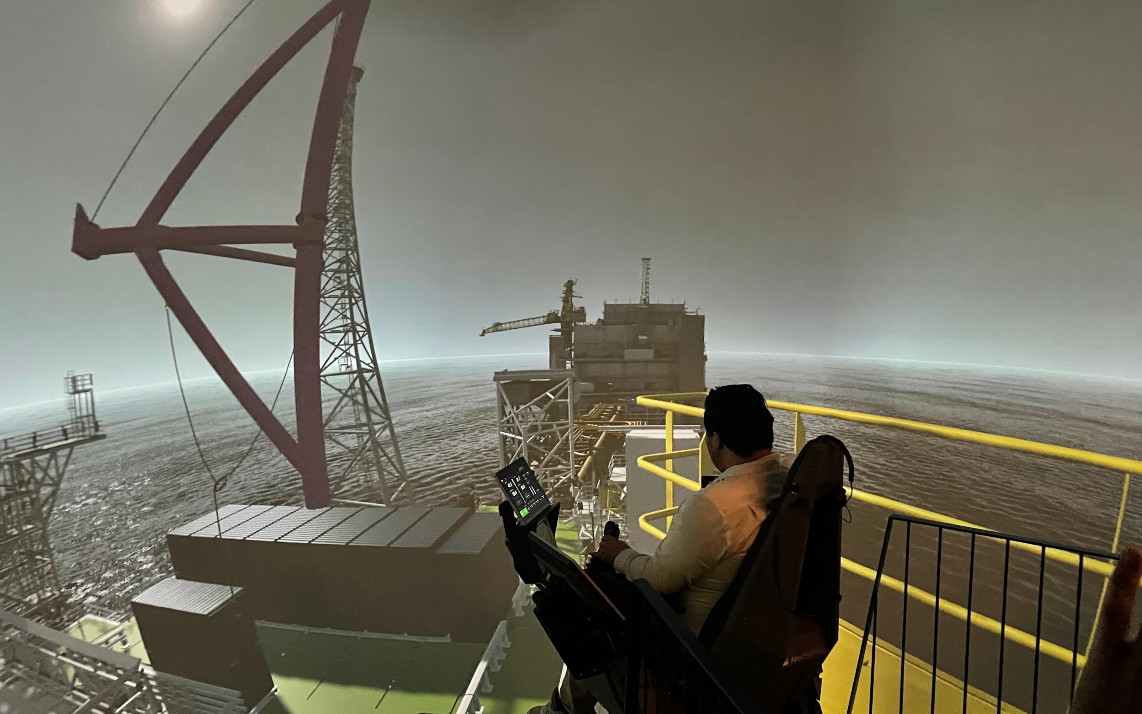

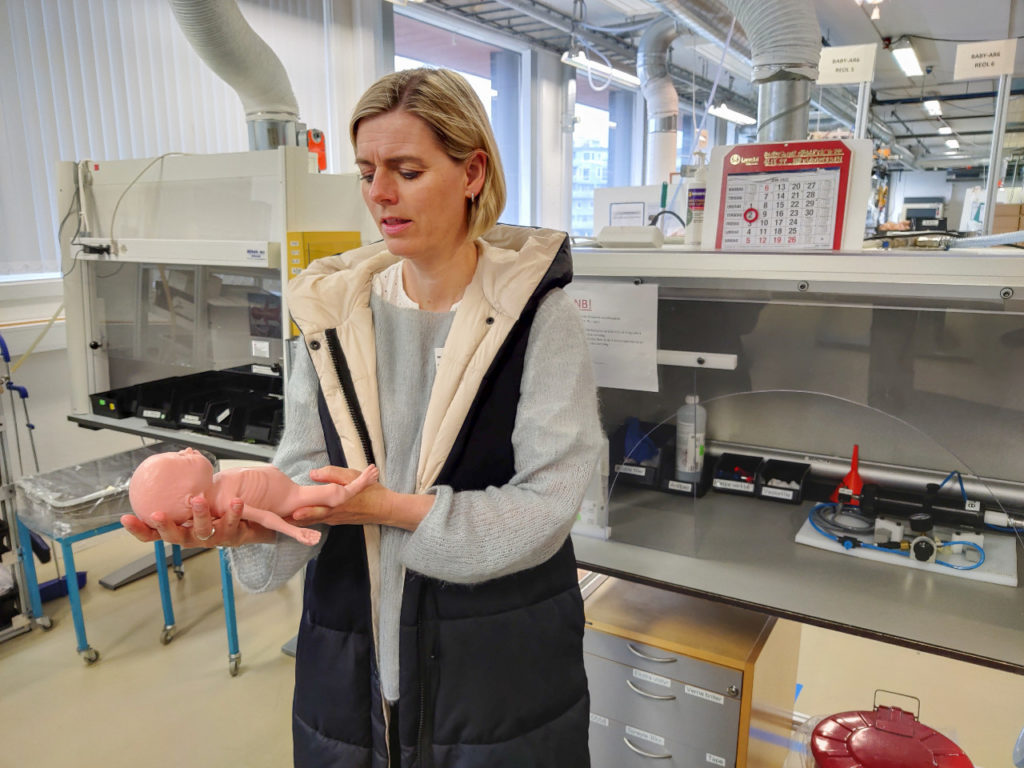

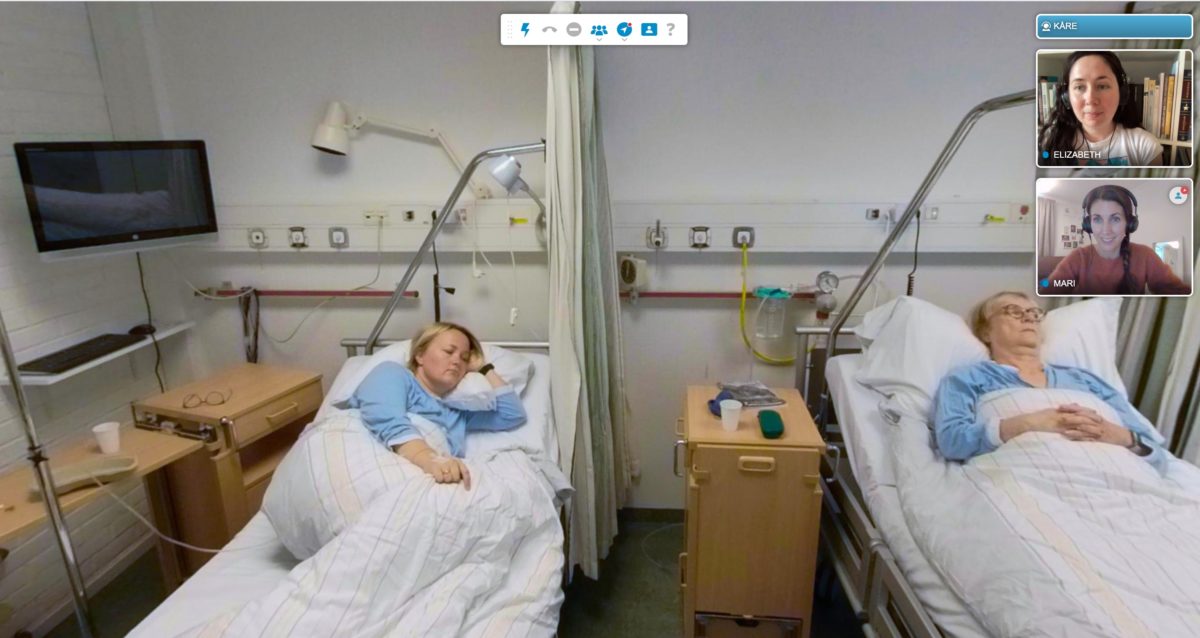

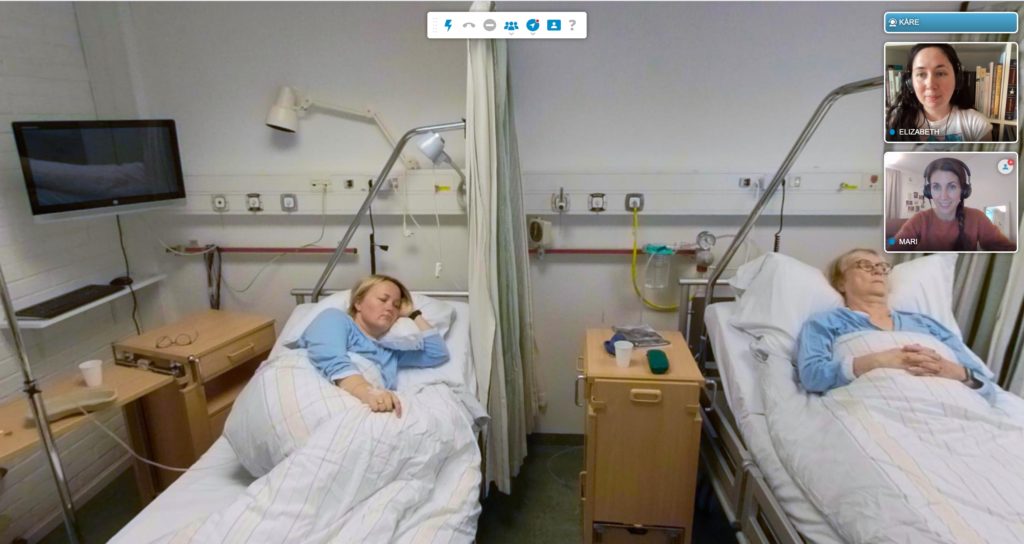

That is why simulation plays an essential role in the learning of health science students (Kim et al., 2016). Creating 360° videos through which the student feels immersed in a real environment will not only reduce simulation costs but also help the student to disregard distracting elements and focus on the learning objectives.

Immersive environments, such as 360° videos, are extremely relevant in the technological explosion that we are experiencing, given that their potential to improve learning through training from multiple perspectives, situations and experiences has already been proven.

Hallberg, Hirsto and Kaasinen (2020) stated that 360° technology can be used to facilitate learning related to the representation of spatial knowledge, participation, and context, both experimental and collaborative, which makes this technology an effective tool for learning practical aspects digitally. The main difference between this technology and others is that 360° technology allows recorded virtual environments to be created so that students can immerse themselves in a situation or action that seems 100% real.

To achieve optimal learning, it is essential that this technology has the following characteristics:

- That the user experience is satisfactory. That is to say, that it does not cause dizziness, that the recording quality is sufficient for the student to feel that they are “inside” the scene and that the interaction is as natural as possible.

- That the virtual elements do not distort. Often, overlaid text or 2D elements are placed on the spherical surface, which may cause distortions that distract the student.

Balzaretti et al. (2019) state that promoting reflection among students through decision-making enhances learning effectiveness.

According to Ferretti et al. (2018), there are four keys to the use of audiovisual media as a reflective method in learning:

- Discover and describe

- Look for cause-effect links

- Exercise analytical thinking

- Identify possible alternatives

It is thus important to bear in mind that what students value most when it comes to keeping their attention on 360° videos is not so much visual excellence or technological novelty, but rather “enjoying” and “doing an interesting activity”. In fact, when compared to 2D video activities, these are the elements that stand out the most (Snelson & Hsu, 2019).

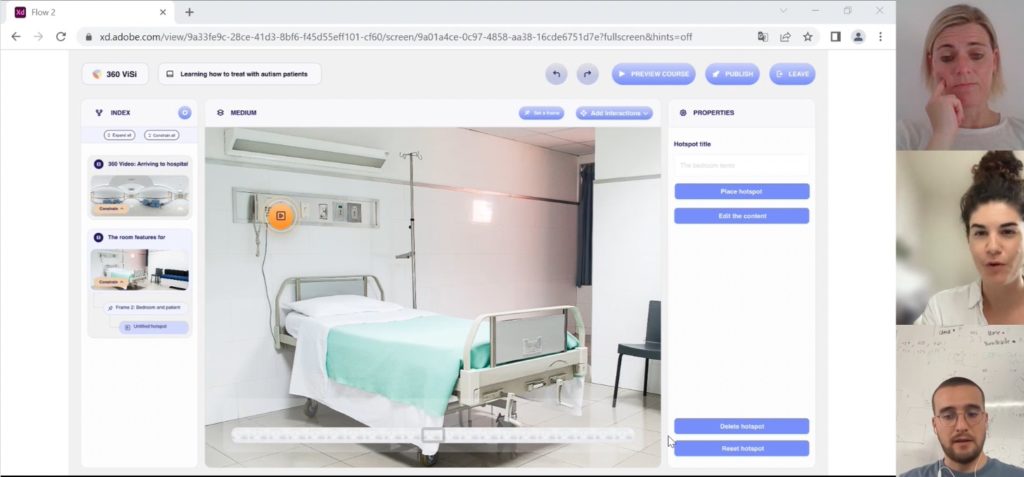

For this, it is important that the audiovisual cues (hotspots) are well designed to direct the attention of users in virtual environments, since they are key to the effective immersion of the student and the feeling of spatial presence. A lack of such cues can lead to boredom, while overuse can prevent students from focusing their attention, as they switch from one item to another for fear of missing something important, which ends up leading to frustration and stress.

Regarding the pedagogical benefits of this technology, among the different studies available, the one carried out in 2020 by Hyttinen and Hatakka stands out, since they sought to take the use of 360° video in teaching to the next level by using it for live teaching sessions. The results, which students rated very positively, reflected some clear benefits: no need for physical materials (simulators, objects, actors, etc.), a greater sense of presence in the environment, the use of devices that are familiar to the student (smartphones), a fun learning experience, and greater concentration on the task, since there are no distracting elements.

In order to achieve these benefits, it is important to bear in mind that there will be a certain percentage of students who would need to bridge the digital divide in order to feel comfortable with the use of this technology. This can be achieved through training, not only for students, but also for teachers (Tan et al., 2020).

As for the duration of 360° content, although the scientific literature on the use of this technology is still not very abundant, a systematic review published in early 2021 by Hamilton, McKechnie, Edgerton and Wilson in the Journal of Computers in Education identifies two approaches to length: a duration set by the content creators, or content that lasts as long as the participant takes to complete the task. The vast majority of studies opted for a short video (with an average duration of 13 min).

2. A framework for the design of reusable learning objects, including learning objects of 360° media

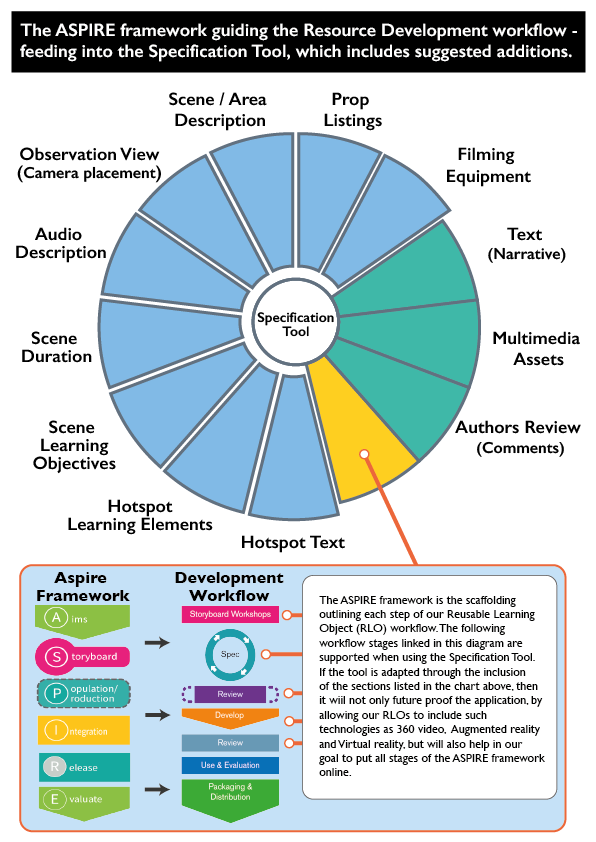

The ASPIRE framework has been successfully applied over many years by the 360ViSi project partner HELM (Health E-Learning and Media Team) at the University of Nottingham to help scaffold the design and development of learning resources, including Reusable Learning Objects (RLOs) and Open Educational Resources (OERs) of any medium.

There are many OER learning design frameworks available, but the ASPIRE framework is particularly suited to the 360ViSi project and the design of 360° video-based RLOs because it is flexible enough to involve a community-of-practice style of development. It has been employed on many health-related learning resource projects involving health care professionals, patients, carers, charities and other related health organisations (Wharrad et al., 2021).

Such stakeholder involvement helps to identify and align the requirements of focused learner groups, which in turn helps to shape resource content and the way it is represented. This ability to share stakeholder knowledge and expertise supports the development of quality reusable learning objects that can be shared initially by all project partners and eventually with the wider OER community.

ASPIRE is an acronym for all the steps of the framework involved in RLO creation:

- Aims – helps to focus on getting the correct learning goal

- Storyboarding – allows the sharing of initial ideas and enables a community-based approach to resource design

- Population – combines storyboard ideas into a fully formed specification

- Implementation – a specification peer review is applied, and any concerns addressed before starting resource development

- Release – a second technical peer review is applied and approved before resource release

- Evaluation – for research and development purposes to assess the resource's impact

(Windle, 2014).

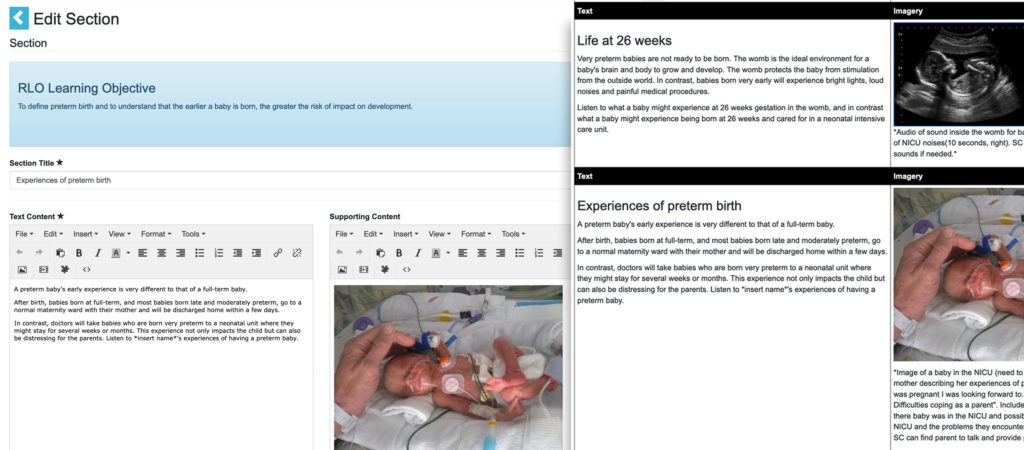

A number of bespoke tools have been developed to help support the implementation of the framework within any given project. One of them is a specification tool, in which the content authors can create a specification to support the ASPIRE framework's Population step and share it with the rest of the project team. This allows for greater inclusion of all team members during this important stage, as they are able to review, discuss and suggest multimedia such as graphics and video content.

Once written, the specification can then be accessed as part of a content peer review stage by a subject expert not previously involved in the project. This allows for a review by a ‘fresh pair of eyes’, ensuring that all the necessary content is included and that the specification is robust enough for development (Taylor, Wharrad and Konstantinidis, 2022). The inclusivity that this tool provides supports and encourages a community-of-practice approach to any given project’s development.

3. Sharing reusable learning objects

An online repository, that is, a digital library or archive accessible via the internet, is the best way to share RLOs across universities and other interested parties. An online repository should have conditions of deposit and access attached.

There are some good examples of online repositories currently available where academics and learners can access, share and reuse content openly released under a Creative Commons licence.

They include:

- https://www.nottingham.ac.uk/helmopen/, which contains over 300 free to use healthcare resources

- https://acord.my/, allowing open access to resources developed by university staff and healthcare and biomedical science students in Malaysia which have been released as part of the ACoRD project.

- https://www.merlot.org/merlot/, which holds a collection of over 90,000 Open Education Resources and around 70,000 OER libraries (Merlot, 2022).

To share RLOs between different Learning Management Systems (LMS), an e-learning standard such as xAPI or cmi5 should be used. cmi5 is a contemporary e-learning specification intended to take advantage of the Experience API (xAPI) as a communications protocol and data model, while defining the components necessary for system interoperability, such as packaging, launch, credential handshake, and a consistent information model (Rustici Software, 2021).
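To make the data model more concrete, the following minimal Python sketch builds a statement in the actor-verb-object form that xAPI uses to record a learning experience, here a learner completing a 360° video RLO. It is an illustration only and not a cmi5-conformant implementation, since cmi5 places further requirements on launch, session and context data that are not shown. The function name `build_statement`, the learner details and the activity address under `example.org` are hypothetical and do not come from the 360ViSi project.

```python
import json
import uuid
from datetime import datetime, timezone


def build_statement(learner_name, learner_email, activity_id, activity_name):
    """Return a minimal xAPI-style statement (actor, verb, object) as a dict.

    All argument values are supplied by the caller; nothing here is
    specific to any particular LMS or Learning Record Store.
    """
    return {
        "id": str(uuid.uuid4()),  # unique identifier for this statement
        "actor": {
            "objectType": "Agent",
            "name": learner_name,
            "mbox": f"mailto:{learner_email}",
        },
        "verb": {
            # "completed" verb from the ADL vocabulary
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {
            "objectType": "Activity",
            "id": activity_id,  # an IRI identifying the learning object
            "definition": {"name": {"en-US": activity_name}},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


if __name__ == "__main__":
    # Hypothetical learner and activity, for illustration only.
    statement = build_statement(
        "Example Learner",
        "learner@example.org",
        "https://example.org/rlo/360-video-demo",
        "360° video demo RLO",
    )
    print(json.dumps(statement, indent=2, ensure_ascii=False))
```

In practice, a statement like this would be sent by the learning object to a Learning Record Store, which is what allows the same RLO to report learner activity regardless of the LMS that launched it.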

References and sources

Balzaretti, N., Ciani, A., Cutting, C., O’Keeffe, L., & White, B. (2019). Unpacking the Potential of 360degree Video to Support Pre-Service Teacher Development. Research on Education and Media, 11(1), pp. 63–69. https://doi.org/10.2478/rem-2019-0009

BlendMedia (2020). https://blend.media/

Ferdig, R. E., Kosko, K. W., & Zolfaghari, M. (2020). Preservice Teachers’ Professional Noticing When Viewing Standard and 360 Video. Journal of Teacher Education, 72(3).

Ferretti, F., Michael-Chrysanthou, P. & Vannini, I. (Eds.) (2018). Formative assessment for mathematics teaching and learning: Teacher professional development research by videoanalysis methodologies. Milano: FrancoAngeli. ISBN 9788891774637. http://library.oapen.org/handle/20.500.12657/25362

Google (2015). https://about.google/stories/year-in-search/

Grossman, P., Compton, C., Igra, D., Ronfeldt, M., Shahan, E., & Williamson, P. W. (2009). Teaching Practice: A Cross-Professional Perspective. Teachers College Record: The Voice of Scholarship in Education, 111(9), 2055–2100. https://doi.org/10.1177/016146810911100905

Hallberg, S., Hirsto, L., & Kaasinen, J. (2020). Experiences and outcomes of craft skill learning with a 360° virtual learning environment and a head-mounted display. Heliyon. https://doi.org/10.1016/j.heliyon.2020.e04705

Hamilton, D., McKechnie, J., Edgerton, E. & Wilson, C. (2021). Immersive virtual reality as a pedagogical tool in education: a systematic literature review of quantitative learning outcomes and experimental design. Journal of Computers in Education. https://link.springer.com/article/10.1007/s40692-020-00169-2

Hyttinen, M. & Hatakka, O. (2020). The challenges and opportunities of using 360-degree video technology in online lecturing: A case study in higher education business studies. Seminar.net. https://doi.org/10.7577/seminar.2870

Kim, J., Park, J. H., & Shin, S. (2016). Effectiveness of simulation-based nursing education depending on fidelity: a meta-analysis. BMC Medical Education, 16(152). https://doi.org/10.1186/s12909-016-0672-7

Merlot (2022). Merlot introduction. Merlot Organization. Viewed 14 June 2022. https://www.merlot.org/merlot/

Rustici Software. (2021). cmi5 and the Experience API. https://xapi.com/overview/; https://xapi.com/cmi5/

Snelson, C., & Hsu, Y. C. (2019). Educational 360-Degree Videos in Virtual Reality: a Scoping Review of the Emerging Research. TechTrends, 64(3), 404–412. https://doi.org/10.1007/S11528-019-00474-3

Tan, S., Wiebrands, M., O’Halloran, K. & Wignell, P. (2020). Analysing student engagement with 360-degree videos through multimodal data analytics and user annotations. Technology, Pedagogy and Education 29(5). https://doi.org/10.1080/1475939X.2020.1835708

Taylor, M., Wharrad, H., Konstantinidis, S. (2022). Immerse Yourself in ASPIRE – Adding Persuasive Technology Methodology to the ASPIRE Framework. In: Auer, M.E., Hortsch, H., Michler, O., Köhler, T. (eds). Mobility for Smart Cities and Regional Development – Challenges for Higher Education. ICL 2021. Lecture Notes in Networks and Systems, vol 390. Springer, Cham. https://doi.org/10.1007/978-3-030-93907-6_116

Wharrad, H., Windle, R. & Taylor, M. (2021) Chapter Three – Designing digital education and training for health, Editor(s): Stathis Th. Konstantinidis, Panagiotis D. Bamidis, Nabil Zary. Digital Innovations in Healthcare Education and Training, Academic Press, 2021, pp. 31-45. ISBN 9780128131442, https://doi.org/10.1016/B978-0-12-813144-2.00003-9. (https://www.sciencedirect.com/science/article/pii/B9780128131442000039)

Windle, R. (2014). Episode 2.3: The ASPIRE framework [MOOC lecture]. In Wharrad, H., & Windle, R., Designing e-learning for Health. FutureLearn. https://www.futurelearn.com/courses/e-learning-health