1. The characteristics of 360° video

360° videos and still images immerse the user in a virtual environment built from photographs or video recorded with 180° or 360° cameras, that is, cameras that capture images or footage in all directions and generate a panoramic sphere that users can turn and explore.

Technically, 360° cameras capture overlapping fields of view through multiple lenses pointing in different directions, or use several cameras mounted together in a spherical rig. Special software then stitches the separate images together and compresses the result, usually into a rectangular frame called an equirectangular projection.
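As a rough illustration of what the equirectangular projection does: yaw (the horizontal viewing angle) is spread linearly across the frame's full width and pitch (the vertical angle) across its height, which is why a full-sphere frame is typically twice as wide as it is tall. The Python sketch below assumes yaw 0° at the frame centre, positive yaw to the right and positive pitch upwards; these conventions are chosen for illustration and are not taken from any particular camera's software.

```python
def direction_to_pixel(yaw_deg: float, pitch_deg: float,
                       width: int, height: int) -> tuple[int, int]:
    """Map a viewing direction to a pixel in an equirectangular frame.

    Illustrative conventions (an assumption, not a camera SDK's API):
    yaw 0° is the frame centre and grows to the right,
    pitch +90° is straight up and -90° straight down.
    """
    # 360° of yaw spans the full width; 180° of pitch spans the full height.
    u = (yaw_deg + 180.0) / 360.0   # 0.0 at the left edge, 1.0 at the right edge
    v = (90.0 - pitch_deg) / 180.0  # 0.0 at the top edge, 1.0 at the bottom edge
    # Yaw wraps around the sphere, so wrap the column; pitch does not, so clamp the row.
    x = int(u * width) % width
    y = max(0, min(int(v * height), height - 1))
    return x, y


# A 3840 x 1920 frame keeps the 2:1 ratio of a full sphere.
print(direction_to_pixel(0, 0, 3840, 1920))    # (1920, 960): straight ahead
print(direction_to_pixel(180, 0, 3840, 1920))  # (0, 960): directly behind, at the seam
```

Wrapping the column but clamping the row reflects the geometry: turning past 180° brings the viewer back to the other edge of the frame, whereas looking "past" straight up is not possible.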

The result is a still image, or a linear video, that can be viewed in all directions and in multiple modes on various devices:

- Through a Virtual Reality device, in which the screen updates the scene being displayed according to the user’s head motion.

- On a smartphone, by moving the device in the direction the user wants to look.

- On a computer, by scrolling through the window to focus on the different parts of the scene. This is also possible for smartphones and tablets.

- On a conventional screen, in which case the user is not in control of the viewing angles.
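In each of these modes, the player shows only a window onto the full frame, and head motion, device movement or scrolling simply moves that window. A simplified Python sketch of the horizontal part, assuming an equirectangular frame whose centre column corresponds to yaw 0°: it returns the pixel columns that fall inside a given horizontal field of view, splitting the range when the view crosses the seam where the frame's right edge meets its left edge. Real players also re-project the image onto a sphere for correct perspective, which this sketch leaves out.

```python
def visible_columns(yaw_deg: float, hfov_deg: float, width: int) -> list[tuple[int, int]]:
    """Column ranges [start, end) of an equirectangular frame inside the view.

    Assumes yaw 0° is the frame's centre column and 0 < hfov_deg <= 360.
    """
    px_per_deg = width / 360.0
    centre = (yaw_deg + 180.0) * px_per_deg   # column the viewer is looking at
    span = round(hfov_deg * px_per_deg)       # number of columns covered by the view
    start = round(centre - span / 2) % width  # wrap into [0, width)
    end = start + span
    if end <= width:
        return [(start, end)]
    # The view crosses the seam, so it is split into two ranges.
    return [(start, width), (0, end - width)]


print(visible_columns(0, 90, 3600))    # [(1350, 2250)]
print(visible_columns(180, 90, 3600))  # [(3150, 3600), (0, 450)]
```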

The different devices and modes for projecting 360° imagery also allow for different pedagogical applications. Understanding these technical differences will be important in order to maximize the benefits of 360° video used in education.

The rest of this article focuses on 360° video. However, many of the features and technologies mentioned also apply to 360° still images, and, not least for use in education, a combination of the two formats may also be relevant.

2. A comparison: 360° video and VR

360° video is easily mistaken for virtual reality (VR). Both are immersive media formats, but they differ greatly in how complex they are to produce and in the user interaction they allow.

With the emergence and wide availability of 360° cameras (Samsung, 360Fly, Ricoh, Kodak, Nikon, etc.), the already familiar term “Virtual Reality” was used in marketing to suggest what could be experienced with the new recording devices and the images they produce. The fact that these images could also be played back on Head-Mounted Display (HMD) devices, or “goggles”, which are characteristic of VR technology, lent support to the use of the term. Still, no professionally accepted definition of “Virtual Reality” applies to 360° video, which should instead be understood on the basis of its own, unique characteristics.

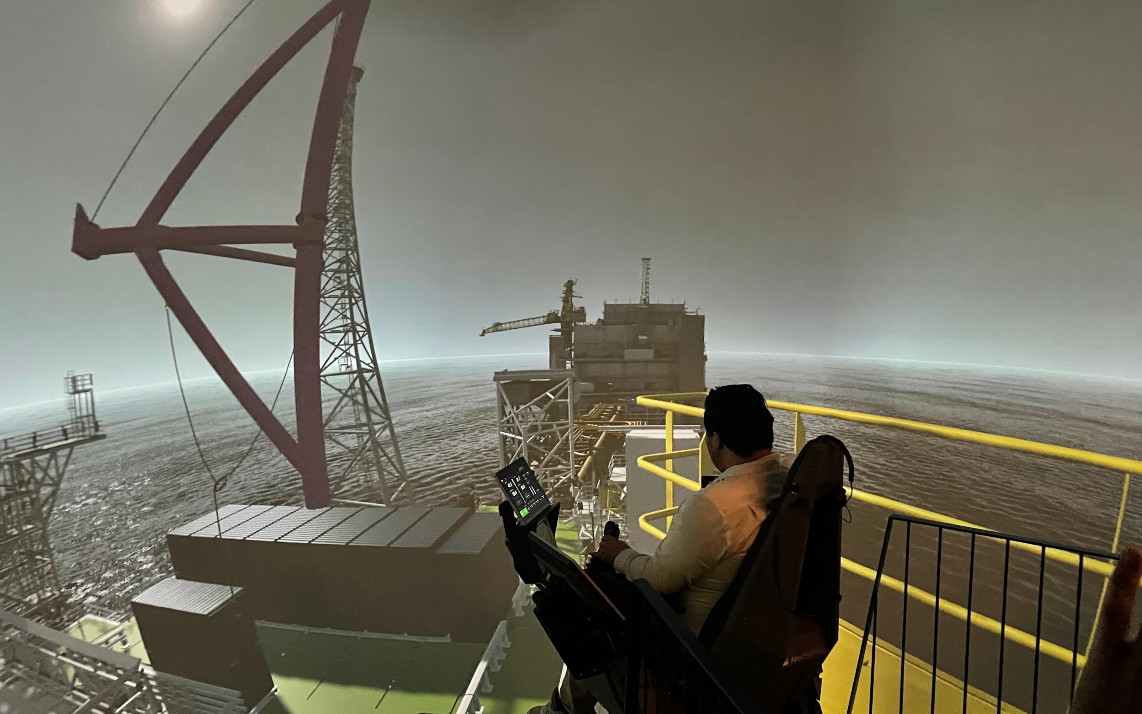

A prominent feature of VR is that it consists of simulated environments, synthetically created with 2D or 3D imagery, which makes it exceptionally well suited to portraying inaccessible or dangerous scenarios and environments. The prominent feature of 360° video, on the other hand, is its ability to play back environments and actions photorealistically, exactly as they were recorded in real time, which makes it exceptionally well suited to documenting or observing real scenarios, events and environments.

Understanding the strengths of the two media also provides insight into their limitations. Their visual characteristics are a fundamental aspect. Differences in navigation, interaction, production, playback and sharing are others, which must be considered when deciding how these media can best be utilized as teaching aids.

2.1 User interaction and navigation

Virtual motion is another evident difference between 360° video and VR. Since a 360° video is prerecorded, the user is limited to looking around and following the directed timeline, with no option of moving either within the virtual space the video portrays or beyond its timeline. Any movement must be directed at the time of recording. It should be noted that such recorded movement in a 360° video may unfortunately result in an unpleasant, dizzying experience for the viewer, who watches it passively.

Still, from a pedagogical point of view, the fixed movements and timeline of a 360° video may also be seen as an advantage. In contrast to the open environment of VR, the directed environment of a 360° video provides intentional storylines that guide the viewer towards an intended learning outcome. Shorter, interlinked storylines may also be used to expand the user’s room for manoeuvre: at certain points in the video, the user can decide which storyline to follow.
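One way to picture such interlinked storylines is as a small graph: each node is a short 360° clip, and each decision point maps the options shown to the viewer to the next clip. The Python sketch below is a hypothetical data model for illustration only; the class, the function and the clip names are invented and do not correspond to any particular authoring tool.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """A short 360° clip; `choices` maps an option label to the next segment id."""
    video_file: str
    choices: dict[str, str] = field(default_factory=dict)  # empty: storyline ends here


def play_path(segments: dict[str, Segment], start: str, decisions: list[str]) -> list[str]:
    """Return the clips a viewer would see, following their decisions in order."""
    current = segments[start]
    played = [current.video_file]
    for decision in decisions:
        if not current.choices:
            break  # the storyline has already ended; extra decisions are ignored
        if decision not in current.choices:
            raise ValueError(f"{decision!r} is not an option here: {sorted(current.choices)}")
        current = segments[current.choices[decision]]
        played.append(current.video_file)
    return played


# Hypothetical lesson: after the introduction, the learner picks one of two storylines.
lesson = {
    "intro": Segment("intro.mp4", {"Assess the patient": "assess", "Call for help": "call"}),
    "assess": Segment("assess.mp4"),
    "call": Segment("call.mp4"),
}
print(play_path(lesson, "intro", ["Call for help"]))  # ['intro.mp4', 'call.mp4']
```

Keeping every branch as a separate, prerecorded clip preserves the directed character of 360° video while still letting the learner make meaningful choices.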

In VR, the user controls the experience within the created, synthetic environment and the properties it has. Its key feature is that users virtually have the ability to move through the environments and interact with them at will.

Of the two media technologies, 360° video is the less flexible in terms of interactivity. However, interactivity can be added to a 360° video through a pointer, normally fixed at the centre of the user's view, optionally combined with a physical button on the playback device for complementary functions.
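A pointer fixed at the centre of the view works by checking which hotspot, if any, lies close enough to the direction the user is facing when the button is pressed. A minimal Python sketch, assuming hotspots are stored as yaw/pitch angles in degrees and using a hypothetical 5° selection radius. Measuring the great-circle angle between directions, rather than subtracting yaw values, keeps the check correct across the 180°/-180° seam and near the poles.

```python
import math
from typing import Optional


def to_unit_vector(yaw_deg: float, pitch_deg: float) -> tuple[float, float, float]:
    """Convert a yaw/pitch direction (degrees) to a 3D unit vector."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.sin(yaw), math.sin(pitch), math.cos(pitch) * math.cos(yaw))


def angle_between(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle angle in degrees between two (yaw, pitch) directions."""
    dot = sum(p * q for p, q in zip(to_unit_vector(*a), to_unit_vector(*b)))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))  # clamp rounding error


def hotspot_under_pointer(view: tuple[float, float],
                          hotspots: dict[str, tuple[float, float]],
                          radius_deg: float = 5.0) -> Optional[str]:
    """Name of the hotspot nearest the view centre within radius_deg, else None."""
    best, best_angle = None, radius_deg
    for name, direction in hotspots.items():
        angle = angle_between(view, direction)
        if angle <= best_angle:
            best, best_angle = name, angle
    return best


# Hypothetical hotspots in a 360° lesson; the check runs when the button is pressed.
hotspots = {"info_panel": (178.0, 2.0), "next_scene": (-45.0, 0.0)}
print(hotspot_under_pointer((-179.0, 0.0), hotspots))  # info_panel
```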

Playing 360° video content on a PC screen enables an even wider range of hotspots and interactive features. However, other methods of interaction, such as the classic keyboard, mouse or joystick, are more difficult to adapt to 360° video. It is with such input devices that virtual, or extended reality (XR), media technologies in general unfold their advantages, including virtual reality (VR), augmented reality (AR) and mixed reality (MR). When motion sensors are added as well, enabling interaction through hand movements for example, XR is indeed the preferred medium if a high degree of user interaction is the goal. Applying these interactive features in XR, however, normally requires expert developers as well as dedicated playback devices. In contrast, 360° video is generally easier to develop and normally requires only off-the-shelf consumer tools for recording and playback.

3. Capture, author and playback

3.1 Recording 360° video

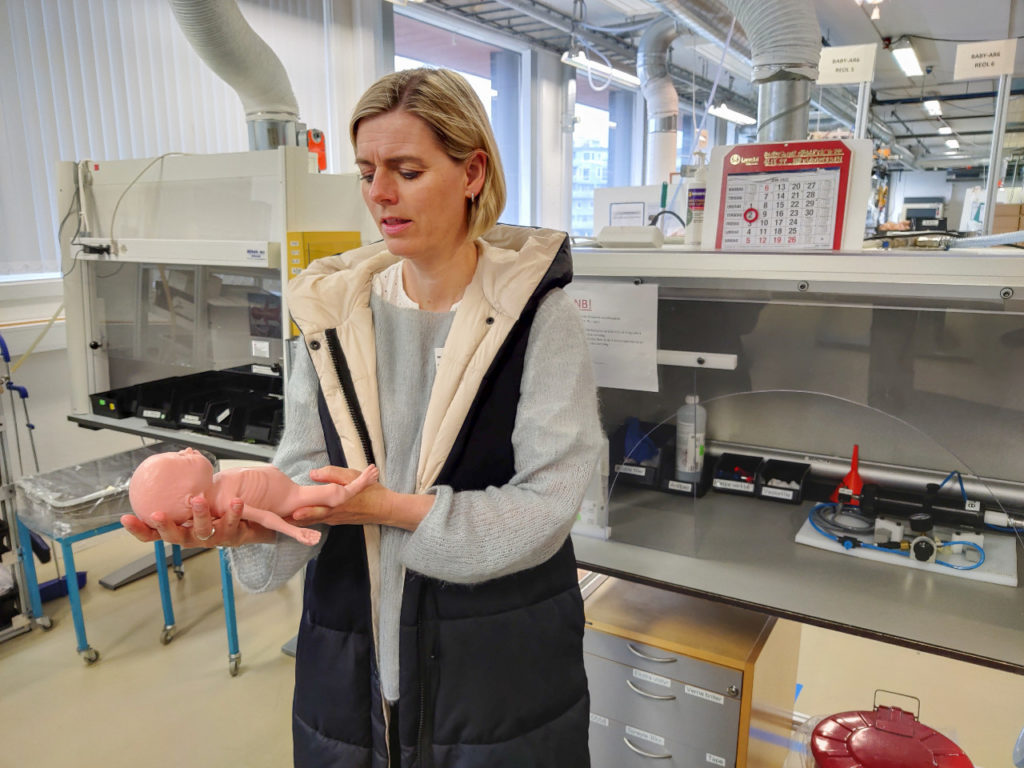

360° videos can be captured with consumer-grade 360° cameras like the Insta360 ONE R. There are, of course, more professional models that can capture stereoscopic video or higher resolutions. There are a few good practices for recording, but the basic idea is to treat the camera as an observer: position it at eye level and aim the lens towards the main action.

With the device manufacturer's software, it is fairly easy to convert raw 360° footage into a usable format without any video editing skills. Afterwards, any video editing software can be used to edit the footage. Special effects specific to 360° video require special tools; some video editors, like Adobe Premiere or DaVinci Resolve, offer such options as plugins. As post-processing requirements grow, so does the required skill set.

For more information on hardware, software and first steps in 360° video production, the 360ViSi project has published a User Guide on Basic Production of 360-Degree Video.

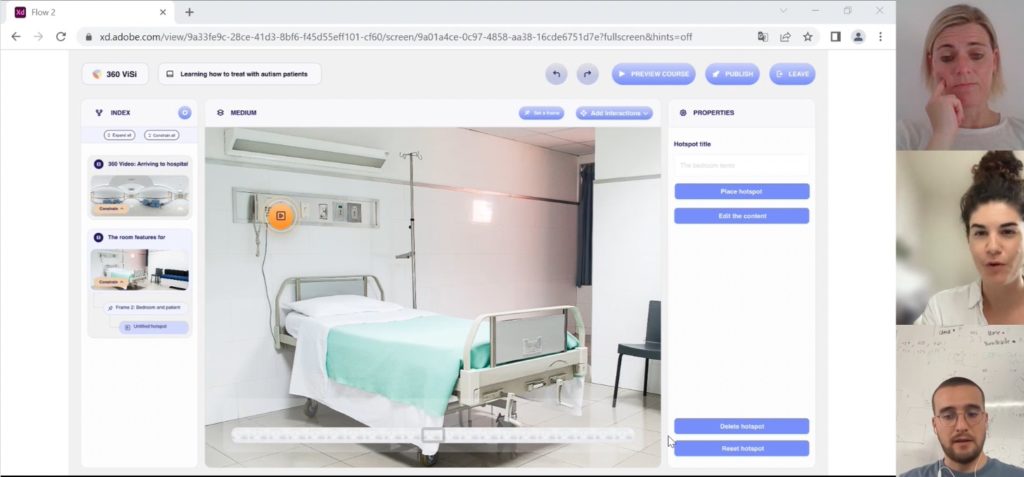

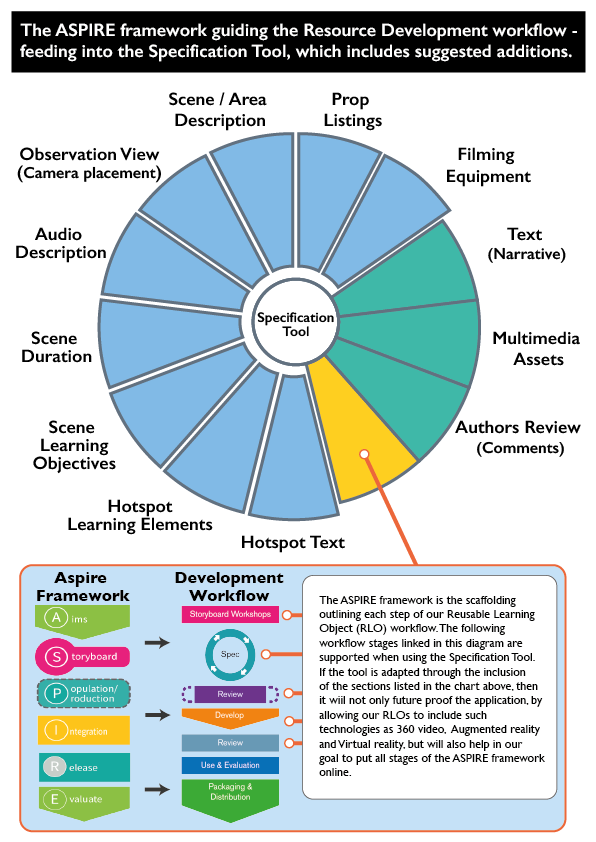

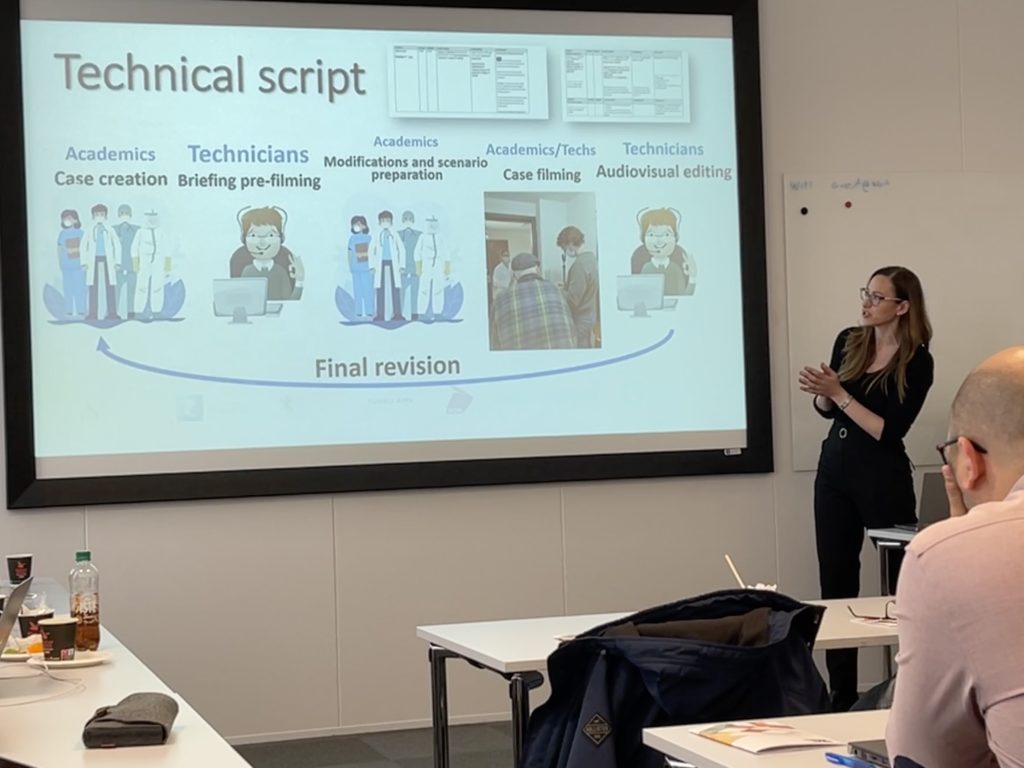

3.2 Authoring an interactive experience

There are multiple ways to build 360° video content into an interactive experience. The easiest way is to use an existing tool, like 3DVista, to create a 360° video tour and also include other embedded content.

These tour platforms usually allow the creator to include multiple action points between which the user can teleport. From these different action points the user sees a different 360° video or image that also can include overlays of other interactive content. Such content can usually be additional contextual images, videos or text information. The 360° videos can also be embedded into a VR application (3DVista, 2021).
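Because a tour is essentially a set of action points connected by teleport links, a broken link, or an action point that no link leads to, is an easy mistake to make while authoring. The Python sketch below checks a tour described as a plain dictionary; this format is a hypothetical stand-in for illustration, not the project file format of 3DVista or any other tool.

```python
from collections import deque


def check_tour(tour: dict[str, dict], start: str) -> tuple[list[str], list[str]]:
    """Return (broken links, action points unreachable from `start`)."""
    broken = sorted(
        f"{point} -> {target}"
        for point, spec in tour.items()
        for target in spec.get("links", [])
        if target not in tour
    )
    # Breadth-first search over the teleport links that do exist.
    reached, queue = {start}, deque([start])
    while queue:
        for target in tour[queue.popleft()].get("links", []):
            if target in tour and target not in reached:
                reached.add(target)
                queue.append(target)
    unreachable = sorted(set(tour) - reached)
    return broken, unreachable


# Hypothetical tour: each action point has its own 360° media, links and overlays.
tour = {
    "entrance": {"media": "entrance.mp4", "links": ["ward", "lab"]},
    "ward": {"media": "ward.mp4", "links": ["entrance"], "overlays": ["checklist.pdf"]},
    "storage": {"media": "storage.jpg", "links": ["entrance"]},
}
print(check_tour(tour, "entrance"))  # (['entrance -> lab'], ['storage'])
```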

Interactive VR experiences are built using 3D game engines, like Unity or Unreal Engine, with a specialized software development kit (SDK) added on top. Game engines are suitable for VR development because they already handle real-time 3D rendering and user interaction. A 360° video or image can be used as the background of the application, with interactive 3D models overlaid on the video. The output of VR development is usually a desktop application, but there are options for other platforms as well (Valve, 2021).

Other XR experiences, like interactive augmented reality (AR) content, in turn require other tools. AR is an XR technology that overlays and combines virtual data and synthetic graphics with real images and data from the physical world. Some current development tools are limited to the platform the content is built for. Common SDKs for AR development include ARCore (Android) and ARKit (iOS). These SDKs can be used in different development environments, like Android Studio and Xcode, and most common SDKs can also be used inside game engines, like Unity or Unreal Engine. There are also more specialized SDKs for particular Head-Mounted Displays (HMD), like the Microsoft HoloLens (Chen et al., 2019).

3.3 Consuming 360° and XR media

Both XR and 360° media can be played on several types of devices. When choosing a playback device, however, there are several factors to consider, such as the capacity for interactivity, the source from which the device collects the content and plays it back, availability, ease of use and comfort for the user, and, not least, price.

For 360° video, there are basically two ways of consuming content. The most accessible is a flat screen with some kind of controller, like a monitor with a mouse or a mobile phone with a touchscreen or gyroscope. The other is a Head-Mounted Display (HMD), or goggles, as discussed further below. HMDs are more immersive, but they are also dedicated devices that often need to be connected to a computer.

With flat-screen devices, the only ways to interact with a 360° video are clicking to select and rotating the view. It is also possible to get a stereoscopic 3D view on a smartphone with a Google Cardboard-like headset, which presents a separate image to each eye.
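This split can be sketched as two small calculations: where each eye's image goes on the phone screen, and which part of the video frame feeds each eye. The Python below is illustrative; it assumes a landscape screen divided into equal left and right halves and, for stereoscopic footage, the common top-bottom packing with the left-eye image in the upper half. Other packings exist, so the actual footage's format should be checked.

```python
from typing import NamedTuple


class Rect(NamedTuple):
    x: int
    y: int
    width: int
    height: int


def eye_viewports(screen_w: int, screen_h: int) -> tuple[Rect, Rect]:
    """Left- and right-eye areas of a landscape phone screen in a Cardboard-style viewer."""
    half = screen_w // 2
    return Rect(0, 0, half, screen_h), Rect(half, 0, screen_w - half, screen_h)


def source_region(eye: str, frame_w: int, frame_h: int, stereo: bool) -> Rect:
    """Part of the decoded video frame shown to one eye.

    Monoscopic video gives both eyes the whole frame, so there is no true depth;
    stereoscopic video is assumed to be packed top-bottom, left eye on top.
    """
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    if not stereo:
        return Rect(0, 0, frame_w, frame_h)
    half = frame_h // 2
    return Rect(0, 0, frame_w, half) if eye == "left" else Rect(0, half, frame_w, frame_h - half)


print(eye_viewports(2400, 1080))                 # left half, then right half
print(source_region("right", 3840, 3840, True))  # Rect(x=0, y=1920, width=3840, height=1920)
```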

With VR headsets there is the possibility to interact with the environment through controllers and also move in a 3D synthetic space. These more complex interactions demand more from the 3D environment and are not useful features with basic 360° videos.

Consuming AR content varies between use cases. For more entertainment-oriented or consumer-targeted applications, a smartphone is the go-to device, because AR-capable smartphones are common. Still, dedicated AR Head-Mounted Displays, like the Microsoft HoloLens, are the most suitable and give the best immersive experience. AR headsets are, however, much more expensive than VR headsets and are not targeted at consumers.

3.3.1 Head-Mounted Display (HMD) devices

VR goggles for PC: The VR goggles that revolutionised virtual reality were the Oculus Rift, followed by others like the HTC Vive. This type of goggles provides the best user experience, but requires a powerful PC with a good graphics processor capable of rendering satisfactory images.

This type of device is the closest thing to literally being inside a virtual environment. However, such a powerful sensation may make the user feel dizzy or unwell. Additionally, the cables limit movement and require a distance of two to five metres to be kept between the user and the PC. The device also needs to be installed thoroughly.

Autonomous VR goggles: There are also cordless virtual reality goggles. These autonomous goggles have a built-in processor, screen and sensors, all in one, and connect via Wi-Fi or Bluetooth.

To start using this type of device the user simply needs to put them on, and the experience will start. They have buttons that enable navigation and can be configured to choose what you want to see. Most models are compatible with Samsung and the applications in their catalogue.

Recently, the Oculus Go has been brought to market with an LCD screen and an integrated sound system that achieves a high degree of immersion in the VR experience. Oculus also has a more advanced standalone headset line-up with the Oculus Quest and the Oculus Quest 2 (Oculus, 2020). HTC is also preparing its Vive Standalone, a highly anticipated model, with its own platform and a Qualcomm Snapdragon 835 processor.

VR goggles for smartphones (Google Cardboard and Gear VR): These devices are the cheapest option, although the experience is obviously not the same as with dedicated goggles. They are simple to use: a smartphone is inserted into the goggles case, and the lenses of the goggles, which make the 3D effect possible, give the user a feeling of immersion in virtual reality. Their appeal is simplicity, ease of access and low cost.

With a common smartphone, virtually everyone has the opportunity to enter an immersive environment. All that is needed is a cheap cardboard case to house the smartphone, whether to play a 360° video from a video platform or to run an application designed for audio-visual immersion (Google VR, 2021).

3.3.2 Notes on the future technology of XR media displays

One feature that is steadily improving in 360° video and VR headsets is the resolution of cameras and screens, and this alone will make a learning experience more immersive.
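A rough worked example shows why resolution matters so much for 360° content: the frame's full width is spread over 360°, so only the slice inside the viewer's field of view reaches the screen at any moment. The 90° horizontal field of view used below is an assumed round figure for illustration, not the specification of any particular headset.

```python
def pixels_in_view(frame_width: int, hfov_deg: float) -> float:
    """Horizontal pixels of an equirectangular frame that fall inside the field of view."""
    # The whole frame width covers 360°, so the view gets a proportional slice.
    return frame_width * hfov_deg / 360.0


for width in (3840, 5760, 7680):  # 4K, 5.7K and 8K frame widths
    print(width, round(width / 360.0, 1), round(pixels_in_view(width, 90)))
# 3840 10.7 960
# 5760 16.0 1440
# 7680 21.3 1920
```

In other words, under this assumption a 4K 360° video offers roughly the horizontal detail of a 960-pixel-wide image inside the view, which is why higher capture and display resolutions are so noticeable.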

Replacing the original Oculus Quest standalone VR headset, the Oculus Quest 2, released in October 2020, raises the standard of entry-level VR headsets. The new model has higher resolution, a higher refresh rate and improved processing power.

For PC-supported VR, the HP Reverb G2 headset was released in autumn 2020. It has a much higher resolution than any consumer-grade VR headset so far and, like the Oculus Rift S, removes the need for external sensors.

With the new PS5 coming out soon, a PSVR 2 is likely to follow. This may expand the market with a consumer-friendly VR alternative.

Manufacturers like Nvidia and AMD keep pushing the limits of what a graphics processing unit (GPU) can do. The recent release of Nvidia’s RTX 3080/3090 opens up for better graphical fidelity in VR, which enhances the immersive experience.

4. Collaboration in 360° and XR media

Real-time communication and multiuser interaction in synthetic virtual environments are common, typically in real-time online games. This feature of XR technology is also useful in XR applications for education, opening up for both instructor-led activities and collaborative training and simulated activities, where intelligence built into the application may also be used to influence the experience.

A particular benefit is independence of space: participants in the virtual activity can interact regardless of their physical location.

Another characteristic is that participants have to be represented in the virtual environment by an avatar. For some activities, particularly simulations, this may be an advantage. For collaborative activities it is likely to be the opposite, and an avatar-based environment is also a complex and expensive solution for activities that may be better served by consumer online services for live video-based collaboration.

Recently, several services have been developed for transmitting real-time 360° video. This solution is still largely unexplored, but may prove to open up new ways of both remote collaboration and educational activities, in which every participant can individually control the camera viewpoint remotely.

During the COVID-19 lockdowns, for example, this technology was reported to be very useful for remote site inspections and surveys. Avatour is one promising online service for real-time 360° video conferencing, launched in spring 2020 during the first COVID-19 lockdown. Avatour presents the service as “…the world’s first multi-party, immersive remote presence platform” (Avatour, 2021).

Also in 2020, 3DVista, a leading provider of 360° video services and software, launched another approach to remote collaboration in a 360° video environment, called “Live Guided Tours”. Every participant can navigate the tour freely or follow the lead of the person controlling it. Building on the features of 3DVista’s 360° video editor, the tour may also include interactive multimedia hotspots (3DVista, 2020), which is particularly relevant for educational purposes.

The latest addition to interaction in immersive XR environments is the Metaverse. In late 2021, Facebook, which then renamed itself Meta, presented the Metaverse as its ultimate vision of a social media platform, which is also meant to include Meta Immersive Learning, described as a “…pioneering immersive learning ecosystem”. By adding immersive technologies like AR and VR, the claim is that learning will “…become a seamless experience that lets you weave between virtual and physical spaces” (Meta, 2021).

It remains to be seen whether the Metaverse will live up to its promise, or gain popularity among the public at all. Still, the vision of the Metaverse highlights the potential of XR media technologies, including 360° video, as the next step in virtual social interaction and, indeed, in learning.

Have a look at 360ViSi’s review of Meta Immersive Learning.

5. References and sources

3DVista. (2020, May 28). Live-Guided Tours.

https://www.3dvista.com/en/blog/live-guided-tours/

3DVista. (2021). Virtual Tours, 360º video and VR software.

https://www.3dvista.com/en/products/virtualtour

Avatour. (2021).

https://avatour.co/

Chen, Y., Wang, Q., Chen, H., Song, X., Tang, H., & Tian, M. (2019). An overview of augmented reality technology. Journal of Physics: Conference Series, 1237, 022082.

Google VR. (2021). Google Cardboard. Google.

https://arvr.google.com/cardboard/

Hassan Montero, Y. (2002). Introducción a la Usabilidad. No Solo Usabilidad, nº 1, 2002. ISSN 1886-8592. Accessed October 20.

http://www.nosolousabilidad.com/articulos/introduccion_usabilidad.htm

Meta. (2021). Meta Immersive Learning.

https://about.facebook.com/immersive-learning/

Oculus. (2020, September 16). Introducing Oculus Quest 2, the next generation of all-in-one VR.

https://www.oculus.com/blog/introducing-oculus-quest-2-the-next-generation-of-all-in-one-vr-gaming/

Rustici Software. (2021). cmi5 solved and explained.

https://xapi.com/cmi5/

Sánchez, W. (2011). La usabilidad en Ingeniería de Software: definición y características. Ing-novación. Revista de Ingeniería e Innovación de la Facultad de Ingeniería. Universidad Don Bosco. Año 1, No. 2. pp. 7-21. ISSN 2221-1136.

Valve. (2021). SteamVR Unity Plugin.

https://valvesoftware.github.io/steamvr_unity_plugin/